We all know the expression “You can’t manage what you can’t measure”, but do we really understand it?

After execution, feedback is an essential part of all processes. Just think about how difficult it would be to drive from home to work wearing a blindfold. Without your sense of sight to give you feedback on the traffic signals and the locations of other cars, you would crash your car. Yet we develop software systems without instituting formal measurement programs all the time, and then wonder why we succeed so rarely. (For success rates, see Understanding your chances of having a successful software project.)

You can’t manage what you can’t measure

No measurement means no feedback, which means your chances of success are reduced. Success is possible without formal measurement, but it is much easier with it.

Formal measurement raises productivity by 20.0% and quality by 30.0%

A best practice is one that increases your chance of succeeding; it does not guarantee success. It has been established that formal measurement is a best practice, so why do so few people do it?

Measurement has a cost, and organizations are petrified of incurring costs without getting benefits. After all, what if you institute a measurement program and things don’t improve? In some sense managers are correct: measurement programs cost money to develop, and unless measurement is executed correctly it will not yield any results. But is there a downside to avoiding measurement?

Inadequate progress tracking reduces productivity by 16.0% and quality by 22.5%

Failure to estimate requirements changes reduces productivity by 14.6% and quality by 19.6%

Inadequate measurement of quality reduces productivity by 13.5% and quality by 18.5%

So there are costs to not having measurement. Measurement is not optional; it is a hygiene process, that is, one that is essential to any process, and especially to software development, where the main product is intangible.

A hygiene process is one that can prevent very bad things from happening. Hygiene processes are rarely fun and take time, e.g. taking a shower or brushing your teeth. But history has shown that it is much more cost effective to execute a hygiene process than to risk something very bad happening, e.g. disease or your teeth falling out.

There are hygiene practices that we use every day in software development without even thinking about them:

- Version control

- Defect tracking

Version control is not fun and tracking defects is not fun. Virtually everyone complains about these tools, but the alternative is complete chaos. Only the most broken organizations think that they can develop software systems without them.

Formal measurement is a best practice and a hygiene practice

In the same way that developers understand that version control and defect tracking are necessary, an organization needs to learn that measurement is necessary.

Is Formality Necessary?

The reality is that informal measurement is not comprehensive enough to give consistent results. If measurement is informal, then when crunch time comes people will stop measuring just when you need the data the most.

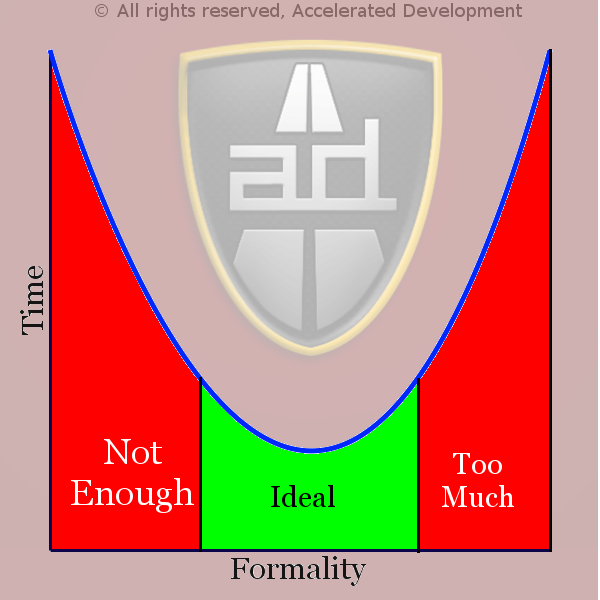

When you don’t have enough formality, processes take longer and by extension cost more. When you have too much formality, you have process for process’s sake and things will also take a long time. Any organization that implements too much formality is wasting its time, but so is any organization that does not implement enough.

When you suggest any formal process, people immediately imagine the most extreme form of that process, which would be ridiculous if implemented that way. We have all been in organizations that implement processes that make no sense, but without measurement how do you identify and get rid of them? For every formal process that makes sense, there is a spectrum of implementations. The goal is to find the minimum formality that reduces time and costs. When you find the minimum amount of formal measurement, you will accelerate your development by giving yourself the feedback that you need to drive it.

What to Measure

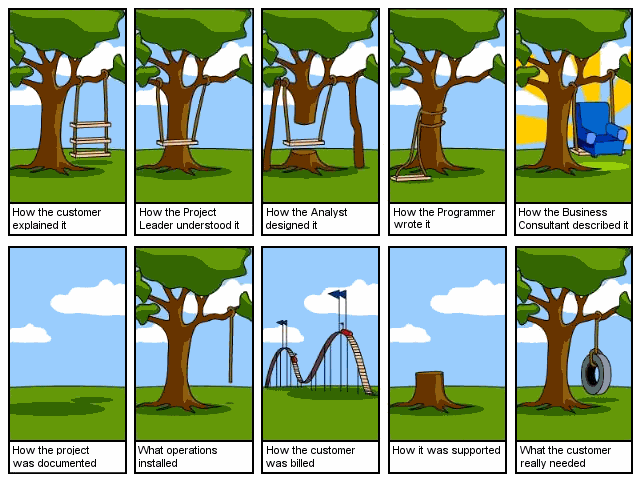

It seems obvious, but incorrect measurement or poor execution leads to useless results. For example, trying to measure productivity by the hours that developers sit at their machines is as useful as measuring productivity by the number of cups of coffee they drink.

Another useless measure is lines of code (LOC). In fact, Capers Jones believes that anyone using LOC as a measurement should be tried for professional malpractice!

Measuring the three things mentioned above will improve productivity and quality, because you avoid the negative effects of not measuring them:

- Progress tracking (productivity +16.0%, quality +22.5%)

- Estimating requirements changes (productivity +14.6%, quality +19.6%)

- Measurement of quality (productivity +13.5%, quality +18.5%)

Other things to measure are:

- Activity based productivity measures (productivity +18.0%, quality +6.7%)

- Automated sizing tools (function points) (productivity +16.5%, quality +23.7%)

- Measuring requirement changes (productivity +15.7%, quality +21.9%)
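To make "measuring requirement changes" a little more concrete, here is a minimal sketch in Python of what a lightweight, formal record might look like. The record fields, the function names, and the idea of reporting changes as a fraction of the baseline are all assumptions made for illustration; they are not taken from Jones, and a real measurement program would define its own terms.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RequirementChange:
    """One logged change to the baseline requirements (hypothetical record)."""
    day: date
    kind: str  # assumed categories: "added", "modified", "removed"


def change_rate(baseline_count: int, changes: List[RequirementChange]) -> float:
    """Return the number of logged changes as a fraction of the baseline size."""
    if baseline_count <= 0:
        raise ValueError("baseline_count must be positive")
    return len(changes) / baseline_count


def changes_per_month(changes: List[RequirementChange]) -> Dict[Tuple[int, int], int]:
    """Count logged changes per (year, month) so that trends become visible."""
    counts: Dict[Tuple[int, int], int] = {}
    for change in changes:
        key = (change.day.year, change.day.month)
        counts[key] = counts.get(key, 0) + 1
    return counts


# Illustrative data only: a baseline of 50 requirements and 5 logged changes.
log = [
    RequirementChange(date(2024, 1, 10), "added"),
    RequirementChange(date(2024, 1, 22), "modified"),
    RequirementChange(date(2024, 2, 3), "modified"),
    RequirementChange(date(2024, 2, 17), "removed"),
    RequirementChange(date(2024, 2, 28), "added"),
]
rate = change_rate(50, log)
monthly = changes_per_month(log)
```

The point of the sketch is that the record is small enough to keep up during crunch time, which is when informal measurement tends to stop.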

So to answer the question: who needs formal measurement?

We all need formal measurement

References

- Jones, Capers. SCORING AND EVALUATING SOFTWARE METHODS, PRACTICES, AND RESULTS. 2008.

N.B. All productivity and quality percentages were derived from 15,000+ actual projects.

Articles in the “Loser” series

Want to see sacred cows get tipped? Check out:

Make no mistake, I am the biggest “Loser” of them all. I believe that I have made every mistake in the book at least once 🙂

developer has an opportunity to plan his code, however, there are many developers who just ‘start coding’ on the assumption that they can fix it later.

developer has an opportunity to plan his code, however, there are many developers who just ‘start coding’ on the assumption that they can fix it later.

that was pioneered by the Eiffel programming language It would be tedious and overkill to use DbC in every routine in a program, however, there are key points in every software program that get used very frequently.

that was pioneered by the Eiffel programming language It would be tedious and overkill to use DbC in every routine in a program, however, there are key points in every software program that get used very frequently.

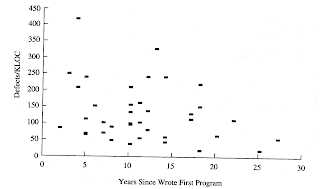

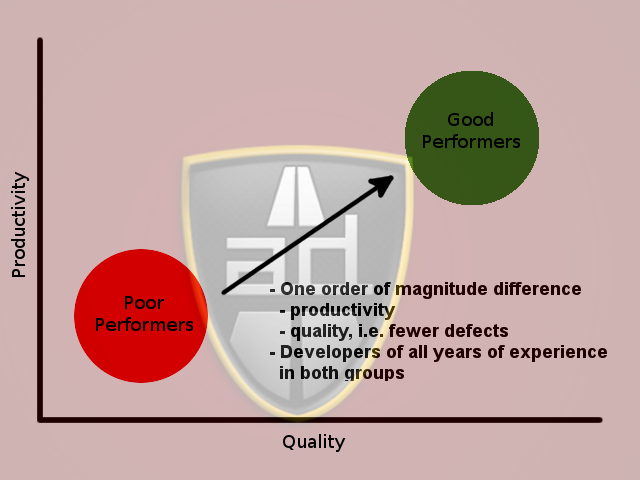

That is the worst programmers and the best programmers made distinct groups and each group had people of low and high experience levels. Whether training helps developers or not is not indicated by these findings, only that years of experience do not matter.

That is the worst programmers and the best programmers made distinct groups and each group had people of low and high experience levels. Whether training helps developers or not is not indicated by these findings, only that years of experience do not matter.

There are 5 worst practices that if stopped immediately will

There are 5 worst practices that if stopped immediately will

To make matters worse, some of the worst practices will cause other worst practices to come into play.

To make matters worse, some of the worst practices will cause other worst practices to come into play.  Friction among managers because of different perspectives on resource allocation, objectives, and requirements. It is much more important for managers to come to a consensus than to fight for the sake of fighting. Not being able to come to a consensus will cave in projects and make ALL the managers look bad. Managers win together and lose together.

Friction among managers because of different perspectives on resource allocation, objectives, and requirements. It is much more important for managers to come to a consensus than to fight for the sake of fighting. Not being able to come to a consensus will cave in projects and make ALL the managers look bad. Managers win together and lose together. Friction among team members because of different perspectives on requirements, design, and priority. It is also much more important for the team to come to a consensus than to fight for the sake of fighting. Again, everyone wins together and loses together — you can not win and have everyone else lose.

Friction among team members because of different perspectives on requirements, design, and priority. It is also much more important for the team to come to a consensus than to fight for the sake of fighting. Again, everyone wins together and loses together — you can not win and have everyone else lose.

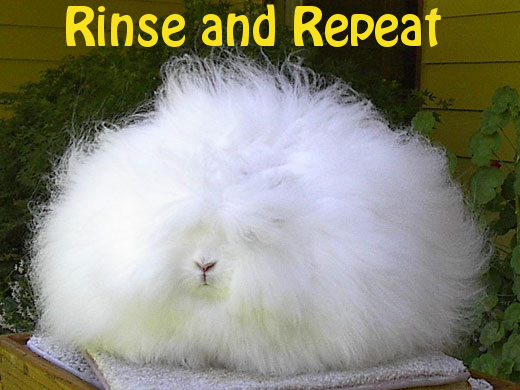

How many times are you going to rinse and repeat this process until you try something different? If you want to break this cycle, then you need to start collecting consistent requirements.

How many times are you going to rinse and repeat this process until you try something different? If you want to break this cycle, then you need to start collecting consistent requirements.