Some say that software development is challenging because of complexity. This might be true, but this definition does not help us find solutions to reduce complexity. We need a better way to explain complexity to non-technical people.

The reality is that when coding a project, size matters. Size is measured in the number of pathways through a code base, not in the number of lines of code. Size is proportional to the number of function points in a project.

There are many IT people who succeed with programs of a certain size and then fail miserably when taking on programs that are more sophisticated. Complexity increases with size because the number of pathways increases exponentially in large programs.

Virtually anyone (even CEOs 🙂 ) can build a hello, world! application: an application that has only a single pathway through it and is as simple as you can get. Some CEOs write the simple hello, world! program and incorrectly convince themselves that development is easy.

    #include <stdio.h>

    /* A single pathway: no inputs, no branches. */
    int main(void) {
        printf("hello, world\n");
        return 0;
    }

If you have an executive who can’t even complete hello, world, then you should take away his computer 🙂

Complexity Defined

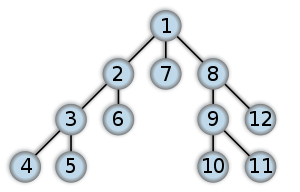

As programs get more sophisticated, the number of decisions that have to be made increases and the depth of the call tree increases. Every non-trivial routine will have multiple pathways through it.

If your average call depth is 10, with an average of 4 pathways through each routine, then this represents over 1 million pathways (4^10 = 1,048,576). If the average call depth is 15, then it represents over 1 billion pathways (4^15 = 1,073,741,824).
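The arithmetic is easy to check. The following is a minimal sketch, assuming every routine has the same number of pathways and that the total is simply pathways per routine raised to the call depth; `count_pathways` is a hypothetical helper name, not something from a library.

```c
#include <assert.h>
#include <stdint.h>

/* Upper bound on distinct pathways: pathways_per_routine ^ call_depth.
   Hypothetical helper; assumes a uniform number of pathways per routine. */
uint64_t count_pathways(uint64_t pathways_per_routine, unsigned call_depth)
{
    uint64_t total = 1;
    for (unsigned level = 0; level < call_depth; level++) {
        /* Guard against silently overflowing the 64-bit counter. */
        assert(pathways_per_routine == 0 ||
               total <= UINT64_MAX / pathways_per_routine);
        total *= pathways_per_routine;
    }
    return total;
}
```

Calling `count_pathways(4, 10)` gives 1,048,576 and `count_pathways(4, 15)` gives 1,073,741,824, so five extra levels of call depth multiply the number of pathways by 1,024.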

Increasingly sophisticated programs have greater call depth than ever, and distributed applications increase the call depth even more because the call depth of a system is additive. This is what we mean by complexity: it is impossible for us to test all of the different pathways in a black box fashion.

Now in reality not every combination of pathways is possible, but you only have to leave holes in a few routines and you will have hundreds, if not thousands, of pathways where calculations and decisions can go wrong.

In addition, incorrect calculations or decisions higher up in the call tree can lead to hard-to-find defects that blow up far away from the source of the problem.

What are Defects?

Software defects occur for very simple reasons: an incorrect calculation is performed that causes an output value to be incorrect. Sometimes there is no calculation at all: input data is not validated for consistency, and that data is either stored incorrectly or goes on to cause incorrect calculations.

We only recognize that we have a defect when we see an output value and recognize that it is incorrect. More likely, QA sees it and tells us that it is incorrect.

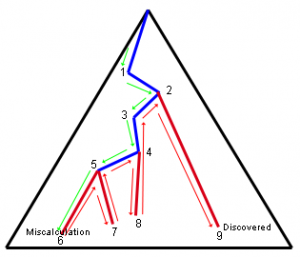

Basically, we follow a pathway that is correct through nodes 1, 2, 3, 4, and 5. At node 6 we make a miscalculation, and then we have incorrect values at nodes 7 and 8 and discover the problem at node 9.

So once we have a miscalculation, we will either continue to make incorrect calculations or make incorrect decisions and go down the wrong pathways (where we will then make incorrect calculations).
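A small illustration of that propagation, as a sketch under stated assumptions: the invoice steps, the amounts, and every function name below are hypothetical. The defect is seeded in the discount step, the later steps are correct, and the wrong value only becomes visible in the final total.

```c
#include <assert.h>

/* Origin of the defect: meant to take 10% off, but adds 10% instead. */
long apply_discount_buggy(long cents) { return cents + cents / 10; }

/* The intended calculation. */
long apply_discount_fixed(long cents) { return cents - cents / 10; }

/* Correct step that faithfully propagates whatever it is given: adds 5% tax. */
long add_tax(long cents) { return cents + cents / 20; }

/* Correct step: adds a flat shipping fee of 500 cents. */
long add_shipping(long cents) { return cents + 500; }

/* The output is where the defect is finally observed, two calls
   away from where the miscalculation happened. */
long invoice_total(long cents, long (*discount)(long))
{
    return add_shipping(add_tax(discount(cents)));
}

/* Checking at the origin shrinks the distance to zero: a discount must
   never raise a non-negative price, so the buggy version aborts here. */
long checked_discount(long cents, long (*discount)(long))
{
    long result = discount(cents);
    assert(result <= cents);
    return result;
}
```

For an order of 10,000 cents the correct total is 9,950, while the buggy discount produces 12,050. Nothing in `add_tax` or `add_shipping` is wrong, yet that is where someone debugging from the output would start looking.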

Not all Defects are Equal

It is clear that the more distance there is between a miscalculation and its discovery, the harder the defect is to detect. The greater the call depth, the greater the chance of a large distance between origin and detection. In other words:

Size Matters

Today we build sophisticated systems of many cooperating applications, and the number of pathways grows exponentially with the call depth of the system. This is what we mean by complexity in software.

Reducing Complexity

Complexity is reduced for every function where:

- You can identify when inconsistent parameters are passed to a function

- All calculations inside of a function are done correctly

- All decisions through the code are taken correctly

The best way to solve all 3 issues is through formal planning and development. Two methodologies that focus directly on planning at the personal and team level are the Personal Software Process (PSP) and the Team Software Process (TSP), both created by Watts Humphrey.

Identifying inconsistent parameters is easiest when you use Design by Contract (DbC), a technique that was pioneered by the Eiffel programming language. It is important to use DbC on all functions that are in the core pathways of an application.
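C has no built-in contract support, so the sketch below approximates DbC with the standard `assert` macro, which is an assumption of this example rather than how Eiffel does it. The routine `mean_percentage` and its valid range of 0 to 100 are hypothetical. Note that `assert` is compiled out when `NDEBUG` is defined, so this form only protects debug builds.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical routine: mean of `count` readings, each expected in [0, 100]. */
double mean_percentage(const int *readings, size_t count)
{
    /* Preconditions: reject inconsistent parameters at the boundary. */
    assert(readings != NULL);
    assert(count > 0);

    long sum = 0;
    for (size_t i = 0; i < count; i++) {
        /* Precondition on the data itself. */
        assert(readings[i] >= 0 && readings[i] <= 100);
        sum += readings[i];
    }
    double mean = (double)sum / (double)count;

    /* Postcondition: the result must stay inside the input range. */
    assert(mean >= 0.0 && mean <= 100.0);
    return mean;
}
```

With the preconditions in place, a caller that passes an empty array or a reading of 250 fails at the call site instead of producing a plausible-looking number that surfaces several routines later.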

Test Driven Development is a reliable way to make sure that all calculations inside of a function are done correctly, but only if you write tests for every pathway through a function.
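As a rough sketch of what "a test for every pathway" means, consider a hypothetical routine with exactly three pathways. The function `shipping_cents`, its thresholds, and the test function are all invented for illustration.

```c
#include <assert.h>

/* Hypothetical routine with exactly three pathways. */
int shipping_cents(int order_cents)
{
    if (order_cents <= 0) {
        return -1;      /* pathway 1: invalid order */
    }
    if (order_cents >= 5000) {
        return 0;       /* pathway 2: free shipping */
    }
    return 500;         /* pathway 3: flat fee */
}

/* One assertion per pathway, plus the boundaries between them. */
void test_shipping_cents(void)
{
    assert(shipping_cents(0) == -1);     /* pathway 1 */
    assert(shipping_cents(5000) == 0);   /* pathway 2, lower boundary */
    assert(shipping_cents(4999) == 500); /* pathway 3, upper boundary */
    assert(shipping_cents(1) == 500);    /* pathway 3, lower boundary */
}
```

A suite that only checked a 6,000-cent order would pass while leaving two of the three pathways unexercised, which is exactly the kind of hole described earlier.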

Making sure that all calculations are done correctly inside a function and that correct decisions are made through the code is best done through code inspections (see Inspections are not Optional and Software Professionals do Inspections).

All techniques that can be used to reduce complexity and prove the correctness of your program are covered in Debuggers are for Losers. N.B. Debuggers as the only formalism will only work well for systems with low call depth and low branching.

Conclusion

Therefore, complexity in software development is about making sure that all the code pathways are accounted for. In increasingly sophisticated software systems the number of code pathways increases exponentially with the call depth. Using formal methods is the only way to account for all the pathways in a sophisticated program; otherwise the number of defects will multiply exponentially and cause your project to fail.

Only projects with low complexity (i.e. small call depth) can afford to be informal and use only debuggers to get control of the system pathways. As a system gets larger, only formal mechanisms can reduce complexity enough to develop sophisticated systems. Those formal mechanisms include:

- Personal Software Process and Team Software Process

- Design by Contract (via Aspect Oriented Programming)

- Test Driven Development

- Code and Design Inspections

Unfortunately, weak IT leadership, internal politics, and embarrassment over poor estimates will not move the deadline and teams will have pressure put on them by overbearing senior executives to get to the original deadline even though that is not possible. Work executed on these activities will not advance your project and should not be counted in the total of completed hours. So if 2,000 hours have been spent on activities that don’t advance the project then if 9,000 hours have been done on a 10,000 hour project then you have really done 7,000 hours of the 10,000 hour project and
