Developers attach quickly to tools because they are concrete and have well-defined behavior. It is easier to learn a tool than to learn good practices or methodology.

Tools only assist in solving problems; they can’t solve the problem by themselves. A developer who understands the problem can use tools to increase productivity and quality.

Poor developers don’t invest the time or effort to understand how to code properly and avoid defects. They spend their time learning how to use tools without understanding the purpose of the tool or how to use it effectively.

To some degree, this is the fault of the tool vendors. The tool vendors perceive an opportunity to make $$$$$ by providing support for common problems, such as:

- defect trackers to help you manage defect tracking

- version control systems to manage source code changes

- tools to support Agile development (Version One, JIRA)

- debuggers to help you find defects

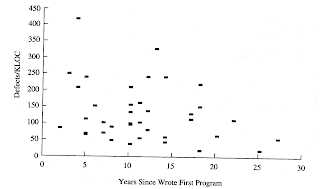

There are many tools out there, but let’s just go through this list and point out where developers and organizations get challenged. Note, all statistics below are derived from over 15,000 projects over 40 years.1

Defect Trackers

Believe it or not, some organizations still don’t have defect tracking software. I’ve run into a couple of these companies and you would not believe why…

Inadequate defect tracking methods: productivity -15%, quality -21%

So we are pretty much all in agreement that we need to have defect tracking; we all know that managing more than a handful of defects is impossible without some kind of system.

Automated defect tracking tools: productivity +18%, quality +26%

The problem is that developers fight over which is the best defect tracking system. The real problem is that almost every defect tracking system is poorly set up, leading to poor results. Virtually every defect tracking system, when configured properly, will yield tremendous benefits. The most common pitfalls are:

- Introducing irrelevant attributes into the defect lifecycle status, i.e. creation of statuses like deferred, won’t fix, or functions as designed

- Not being able to figure out if something is fixed or not

- Not understanding who is responsible for addressing a defect

The tool vendors are happy to continue to provide new versions of defect trackers. However, using a defect tracker effectively has more to do with how the tool is used than with which tool is selected.

One of the most fundamental issues that organizations wrestle with is: what is a defect? A defect only exists if the code does not behave according to specifications. But what if there are no specifications or the specifications are bad? See It’s not a bug, it’s… for more information.

Smart organizations understand that the way in which the defect tracker is used will make the biggest difference. Discover how to get more out of your defect tracking system in Bug Tracker Hell and How to Get Out.

Another common problem is that organizations try to manage enhancements and requirements in the defect tracking system. After all, whether it is a requirement or a defect, it will lead to a code change, so why not put all the information into the defect tracker? Learn why managing requirements and enhancements in the defect tracking system is foolish in Don’t manage enhancements in the bug tracker.

Version Control Systems

As with defect tracking systems, most developers have learned that version control is a necessary hygiene procedure. If you don’t have one, then you are likely to catch a pretty serious disease (and at the least convenient time).

Inadequate change control: productivity -11%, quality -16%

Virtually all developers dislike version control systems and are quite vocal about what they can’t do with their version control system. If you are the unfortunate person who made the final decision on which version control system is used, just understand that there are hordes of developers out there cursing you behind your back.

Version control is simply chapter 1 of the story. Understanding how to chunk code effectively, integrating with continuous build technology, and making sure that the defects in the defect tracker refer to the correct version are just as important as the choice of version control system.

Tools to support Agile

Sorry Version One and JIRA, the simple truth is that using an Agile tool does not make you agile, see this.

These tools are most effective when you actually understand Agile development. Enough said.

Debuggers

I have written extensively about why debuggers are not the best tools to track down defects. So I’ll try a different approach here.

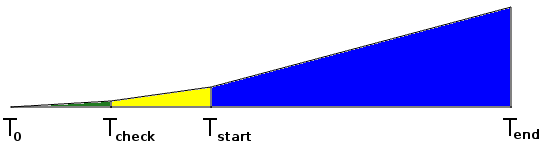

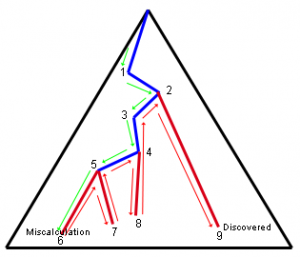

One of the most enduring sets of ratios in software engineering has been 1:10:100. That is, if the cost of tracking down a defect pre-test (i.e. before QA) is 1, then it will cost 10x if the defect is found by QA, and 100x if the defect is discovered in deployment by your customers.

Most debuggers are invoked when the cost function is in the 10x or 100x part of the process. As stated before, it is not that I do not believe in debuggers — I simply believe in using pre-test defect removal strategies because they cost less and lead to higher code quality.
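The 1:10:100 ratio lends itself to a quick back-of-the-envelope calculation. The sketch below is only illustrative: the defect counts are hypothetical, and `relative_cost` is a name made up for this example.

```python
# Relative cost of removing one defect in each phase (the 1:10:100 ratio).
COST_PER_DEFECT = {"pre_test": 1, "qa": 10, "deployment": 100}


def relative_cost(defects_by_phase):
    """Total relative removal cost for a {phase: defect_count} mapping."""
    return sum(COST_PER_DEFECT[phase] * count
               for phase, count in defects_by_phase.items())


# Hypothetical project: 100 defects, most of them found late.
late = {"pre_test": 10, "qa": 60, "deployment": 30}
# The same 100 defects, most of them found before test.
early = {"pre_test": 70, "qa": 25, "deployment": 5}

print(relative_cost(late))   # 10*1 + 60*10 + 30*100 = 3610
print(relative_cost(early))  # 70*1 + 25*10 + 5*100 = 820
```

With these made-up numbers, finding most defects before test cuts the relative removal cost by more than a factor of four, even though the number of defects is identical.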

Pre-test defect removal strategies include:

- Planning code, i.e. PSP

- Test driven development, TDD

- Design by Contract (DbC)

- Code inspections

- Pair programming for complex sections of code
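As a rough illustration of one item on this list, Design by Contract can be approximated in plain Python with assertions. This is a minimal sketch under stated assumptions: the `withdraw` function and its rules are hypothetical, and a real DbC setup would normally use language or library support rather than bare `assert` statements (which Python skips when run with `-O`).

```python
def withdraw(balance, amount):
    """Return the new balance after withdrawing `amount`."""
    # Preconditions: the caller must pass a positive amount it can afford.
    assert amount > 0, "amount must be positive"
    assert amount <= balance, "insufficient funds"

    new_balance = balance - amount

    # Postcondition: the result is never negative and is below the old balance.
    assert 0 <= new_balance < balance
    return new_balance


print(withdraw(100, 30))  # 70
```

A call that breaks the contract, such as `withdraw(10, 20)`, fails immediately at the precondition, which is the point: the defect surfaces at the call site before test, not in QA or deployment.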

You can find more information about this in:

Seldom Used Tools

Tools that can make a big difference but many developers don’t use them:

Automated static analysis: productivity +21%, quality +31%

Automated unit testing: productivity +17%, quality +24%

Automated unit testing generally involves using test-driven development (TDD) or data-driven development together with continuous build technology.
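For readers who have not seen one, an automated unit test can be as small as the following sketch. It uses Python's standard `unittest` module; the `parse_version` function and its test cases are hypothetical and exist only to show the shape of a test that a build server could run on every commit.

```python
import unittest


def parse_version(text):
    """Turn a string such as "2.10.3" into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


class ParseVersionTest(unittest.TestCase):
    def test_parses_dotted_version(self):
        self.assertEqual(parse_version("2.10.3"), (2, 10, 3))

    def test_rejects_non_numeric_parts(self):
        with self.assertRaises(ValueError):
            parse_version("2.x")


# Run the tests programmatically, as a build script might.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParseVersionTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())
```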

Automated sizing in function points: productivity +17%, quality +24%

Automated quality and risk prediction: productivity +16%, quality +23%

Automated test coverage analysis: productivity +15%, quality +21%

Automated deployment support: productivity +15%, quality +20%

Automated cyclomatic complexity computation: productivity +15%, quality +20%
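To give a feel for what a cyclomatic complexity tool computes, here is a deliberately simplified sketch built on Python's standard `ast` module. It counts a handful of branching constructs and adds one; real tools handle many more cases (comprehensions, `match` statements, and so on), so treat `cyclomatic_complexity` as an approximation written for this example, not as any particular product's algorithm.

```python
import ast

# Node types that each add one decision point (a simplification).
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source):
    """Approximate McCabe complexity: decision points + 1."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra decision points.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0 and n > 2:
            return "composite"
    return "other"
'''

print(cyclomatic_complexity(SAMPLE))  # 1 + if + for + if + and = 5
```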

Important Techniques with No Tools

There are a number of techniques available in software development that tool vendors have not found a way to monetize. These techniques tend to be overlooked by most developers, even though they can make a huge difference in productivity and quality.

The Personal Software Process and Team Software Process were developed by Watts Humphrey, one of the pioneers of building quality software.

Personal software process: productivity +21%, quality +31%2

Team software process: productivity +21%, quality +31%3

The importance of inspections is covered in:

Code inspections: productivity +21%, quality +31%4

Requirement inspections: productivity +18%, quality +27%4

Formal test plans: productivity +17%, quality +24%

Function point analysis (IFPUG): productivity +16%, quality +22%

Conclusion

There is definitely a large set of developers who assume that using a tool makes them competent.

The reality is that learning a tool without learning the principles that underlie the problem you are solving is like assuming you can beat Michael Jordan at basketball just because you have great running shoes.

Learning tools is not a substitute for learning how to do something competently. Competent developers are continually learning about techniques that lead to higher productivity and quality, whether or not that technique is supported by a tool.

References

We often hear “the customer is always right!”, but is this really true? Haven’t we all been in situations where the customer is asking for something unreasonable or is simply downright wrong? Aren’t there times when the customer is dead wrong?

Recognizing bad customers is usually not difficult. Transactional customers are often bad customers, especially those that want the lowest price and act as though every product is a commodity; they try to play vendors off against each other despite quality requirements.

Transactional buyers get courted when sales executives chase every opportunity hoping for a sale, and you get pulled into pricing concessions from demanding customers. The problem is that demanding transactional buyers won’t just ask for the best price; they will also ask for product changes.

Good salespeople understand these principles and don’t chase bad customers. But there are not enough good salespeople to go around, so virtually every company has a less-than-excellent salesperson making trouble for product management and engineering.

If you find yourself in a position where you have acquired one or more bad customers (you know who they are...), then your best course of action is to find some way to send them to your competitors. This will increase your profitability and reduce the stress of unreasonable requests flooding into product management and engineering.

Don’t be afraid to let unprofitable and non-strategic customers go. You will feel less stressed and be better off in the long run.

The time pressure to make a sale creates stress, and this stress leads to quick decisions about whether a product or solution can be sold to a customer. We listen to the customer but interpret everything he says according to the products and solutions that we have.

We hear the word “car” and we think that we know what the customer means. The order-taker salesperson will spring into action and sell what he thinks the customer needs. Behind the word “car” is an implied usage, and unless you can ferret out the meaning that the customer has in mind, you are unlikely to sell the correct solution.

Sometimes heroic actions by the product management, professional services, and software development teams lead to a successful implementation, but usually not until there has been severe pain at the customer and midnight oil burned in your company. You can eventually be successful, but that customer will never buy from you again.

Selling the correct solution to the customer requires that you understand the customer’s problem before you sell the solution.

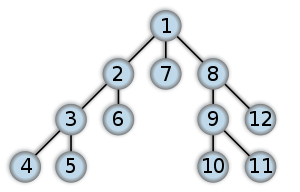

You can see a couple, but only a few people can see the entire forest by just looking at the code. For the rest of us, diagrams are the way to see the forest, and UML is the standard for diagrams.

Contrast that with software where UML diagrams are rarely produced, or if they are produced, they are produced as an afterthought. The irony is that the people pushing to build the architecture quickly say that there is no time to make diagrams, but they are the first people to complain when the architecture sucks. UML is key to planning (see

Yet this is where all the architecture is. Good architecture makes all the difference in medium and large systems. Architecture is the glue that holds the software components in place and defines communication through the structure. If you don’t plan the layers and modules of the system, then you will continually be making compromises later on.

Good diagrams, in particular UML, allow you to abstract away all the low-level details of an implementation and let you focus on planning the architecture. This higher-level planning leads to better architecture and therefore better extensibility and maintainability of software.

These analysis-level UML diagrams will help you to identify gaps in the requirements before moving to design. This way you can send your BAs and product managers back to collect missing requirements before you get too far down the road.

Want to see more sacred cows get tipped? Check out:

This means that the base rate of success for any software project is only 3 out of 10.

When there is a

Requirements uncertainty is what leads to

Technical uncertainty exists when it is not clear that all requirements can be

Skills uncertainty comes from using resources that are unfamiliar with the requirements or the implementation technology. Skills uncertainty is a

An informal business case is possible only if the requirements, technical, and skills uncertainty is low. This only happens in a few situations:

Here is a list of projects that tend to be accepted without any kind of real business case that quantifies the uncertainties:

The best way to solve all 3 issues is through formal planning and development. Two methodologies that focus directly on planning at the personal and team level are the


Therefore, complexity in software development is about making sure that all the code pathways are accounted for. In increasingly sophisticated software systems the number of code pathways increases exponentially with the call depth. Using formal methods is the only way to account for all the pathways in a sophisticated program; otherwise the number of defects will multiply exponentially and cause your project to fail.
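To make the exponential growth concrete, consider a simplified model that is an assumption of this example, not something stated above: every function has the same number of distinct outcomes (`branches`), and each outcome leads into one more level of calls. Under that model the number of pathways is `branches ** depth`.

```python
def path_count(branches, depth):
    """Pathways through `depth` nested calls, each with `branches` outcomes."""
    return branches ** depth


# With only 3 outcomes per function, pathways explode as calls nest deeper.
for depth in (1, 5, 10, 20):
    print(depth, path_count(3, depth))
```

Real programs are messier than this model, but even here a call depth of 10 already yields tens of thousands of pathways, far more than anyone can account for by stepping through them one at a time.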