Three of 10 software projects fail, 3 succeed, and 4 are ‘challenged’¹. When projects fail because you cut corners and exceed your capabilities, you get what you deserve. You don’t deserve pity when you do it to yourself.

We estimate that between $3 trillion and $6 trillion is wasted every year in IT. Most of this is wasted by organizations that are unskilled and unaware that they are ignorant.

Warning: this article is long!

However, there are organizations that succeed regularly because they understand development, implement best practices, and avoid worst practices (see Understanding Your Chances).

In fact, McKinsey and Company in 2012 stated:

A study of 5,400 large scale IT projects finds that the well known problems with IT Project Management are persisting. Among the key findings quoted from the report:

- 17 percent of large IT projects go so badly that they can threaten the very existence of the company

- On average, large IT projects run 45 percent over budget and 7 percent over time, while delivering 56 percent less value than predicted
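To make the averages quoted above concrete, here is a minimal sketch that applies them to a plan. The function name `average_outcome` and the planned figures ($10M budget, 12 months, $20M predicted value) are hypothetical, chosen only for illustration; the three percentages are the ones quoted above.

```python
def average_outcome(budget, months, predicted_value):
    """Apply the quoted averages: 45% over budget, 7% over time, 56% less value."""
    return {
        "cost": budget * 1.45,
        "months": months * 1.07,
        "value": predicted_value * (1 - 0.56),
    }

# Hypothetical plan: $10M budget, 12 months, $20M of predicted value
outcome = average_outcome(10_000_000, 12, 20_000_000)
for name, amount in outcome.items():
    print(f"{name}: {amount:,.2f}")
```

Under these assumed figures, a project planned to return twice its cost ends up delivering less value than it actually cost.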

Projects fail consistently because organizations choose bad practices, avoid best practices, and then wonder why success is elusive (see Stop It! No… really stop it. to understand the 5 most common worst practices).

What is amazing is that failures do not prompt the incompetent to learn why they failed.

Even worse, after the post-fail finger-pointing ceremony, people just dust themselves off, rinse, and repeat.

The reality is that we have 60 years of experience in building software systems. Pioneers like Watts Humphrey, M. E. Fagan, Capers Jones, Tom DeMarco, Ed Yourdon, and institutions like the Software Engineering Institute (SEI) have demonstrated that software complexity can be tamed and that projects can be successful².

The worst developers are not even aware that there is clear evidence about what works or what doesn’t in software projects. Of course, let’s not let the evidence get in the way of their opinions.

Ingredients of a Successful Project

Successful software projects generally have all the following characteristics:

- Proper business case justification and good capital budgeting

- Very good core requirements for primary functionality

- Effective sizing techniques used before executing the project

- Project management appropriate to the size of the project and to the philosophy of the organization

- Properly trained personnel

- Focus on pre-test defect removal

Every missing characteristic reduces your chance of success by an order of magnitude. If you know that one or more of these characteristics are missing then you get what you deserve!

Missing some of these elements doesn’t guarantee failure, but it severely decreases your chance of success.

Let’s go through these elements in order.

This article is very long, so this is a good place to bail if you don’t have time.

Proper Business Case

This is the step that many failed projects skip: the hard work of determining whether a project is viable or not.

Organizations take the Field of Dreams approach, i.e. “If you build it, they will come...” and skip this step due to ignorance, often resulting from executives who do not understand software (see No Business Case == Project Failure). These are executives that do not have experience with software projects and assume that their force of personality can will software projects to success.

Some organizations claim to build business cases, but these documents are worthless. I even know of public companies that write the business case AFTER the project has started, simply to satisfy Sarbanes-Oxley requirements.

A proper business case attempts to quantify the requirements and technical uncertainty of a software project. It does due diligence into what problem is being solved and who it is solving the problem for. It at least verifies with a little effort that the cash flows resulting from the project will be NPV positive.
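As a rough illustration of the NPV check, here is a minimal sketch. The function name `npv`, the cash flows, and the discount rates are all hypothetical; a real business case would use the organization's own cost of capital and ranges that reflect the requirements and technical uncertainty.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs today, cash_flows[t] after t years."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))

# Hypothetical project: $500K spent up front, then $200K of net benefit per year for 4 years
flows = [-500_000, 200_000, 200_000, 200_000, 200_000]

for rate in (0.10, 0.25):
    value = npv(rate, flows)
    verdict = "NPV positive" if value > 0 else "NPV negative"
    print(f"discount rate {rate:.0%}: NPV = {value:,.0f} ({verdict})")
```

With these assumed numbers the project is NPV positive at a 10% discount rate and NPV negative at 25%, which is the kind of sensitivity a business case should surface before the project starts.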

Business cases are generally difficult to write because they involve getting partial information. This can be very difficult if your analysts are substandard (see When BA means B∪ll$#!t Artist).

Very Good Core Requirements

Once a project has a proper business case then you need to capture the skeleton of the core requirements. This is a phase where you determine the primary actors of the system and work out major use case names.

Why expand requirements before starting the project?

Executives have a business to run and need to know when software will be available. If you don’t know how big your project is then you can’t create an effective project plan. You don’t want to capture all the requirements up front, so core requirements (i.e. a good skeleton) help you to size the project without having to gather the detailed requirements.

This is why executives like the waterfall methodology. On the surface, this methodology seems to have a predictable timeline, which is what they need to synchronize other parts of the business. The problem is that the waterfall methodology DOES NOT WORK (see last page).

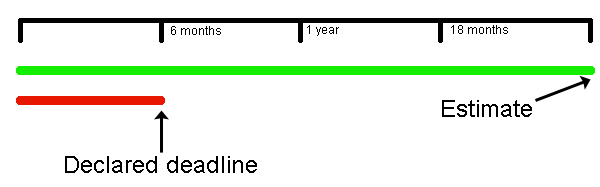

The only way for managers to get a viable estimate of a software project is to expand the business case into requirements that allow you to determine the project’s size before you start it.

This process is just like determining the cost of a house by the square footage and the quality, e.g. 2500 sq. ft. at normal quality (~$200 per sq. ft.) would be approximately $500K, even without detailed blueprints. Very accurate estimates can be derived by sizing a project using function points.

Effective Estimation

Now that you have core requirements, you can determine the size of the project and get an approximate cost. You are fooling yourself if you think that you can size large projects without formal estimates (see Who needs formal measurement?).

Just like you can determine the approximate cost of a house if you know the square footage and the quality, you can estimate a software project pretty accurately if you know how many function points (i.e. square footage) and the quality requirements of the project³.
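The analogy boils down to size multiplied by a unit cost that depends on quality. The sketch below uses the house figures from this article; the software rates in `COST_PER_FP` and the 1,200 function point project are hypothetical placeholders, not industry data, and real estimation models account for far more than a single multiplier.

```python
# Hypothetical cost per function point by quality level (illustrative only, not industry data)
COST_PER_FP = {"normal": 1_000, "high_reliability": 2_500}

def estimate(size, unit_cost):
    """First-order estimate: size (sq. ft. or function points) times cost per unit."""
    return size * unit_cost

# The house from the article: 2500 sq. ft. at ~$200 per sq. ft.
house_cost = estimate(2_500, 200)

# A hypothetical 1,200 function point project at 'normal' quality
project_cost = estimate(1_200, COST_PER_FP["normal"])

print(f"house: ${house_cost:,}")
print(f"project: ${project_cost:,}")
```

The point of the sketch is that neither estimate needs detailed blueprints or detailed requirements, only a defensible size and a unit cost matched to the required quality.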

There is a great deal of literature available on how to effectively size projects, so do yourself a favor and look it up. N.B. There are quite a few reliable tools for accurately estimating software projects, e.g. COCOMO II, SLIM, SEER-SEM. See also Namcook Analytics.

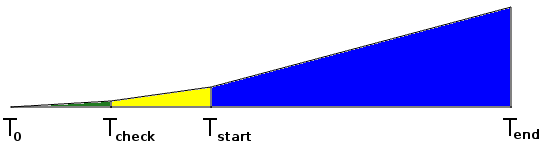

If you don’t size a project then your project plan predicts nothing.

Of course, you could always try a management-declared deadline, which is guaranteed to fail (see Why Senior Management Declared Deadlines lead to Disaster).

Appropriate Project Management

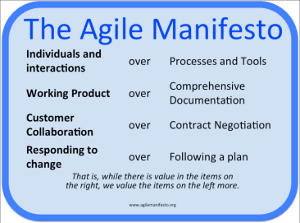

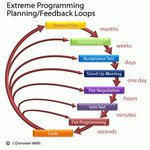

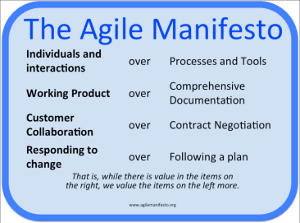

You must select a project methodology appropriate to the organization. Many developers are trying to push their organizations towards Agile software development, although many developers are actually quite clueless about what Agile development is.

Agile software development needs buy-in from the top of the organization. Agile software development will probably do very little for you if you are not doing business cases and gathering core requirements before a project.

Discover how developers who claim that they are ‘Agile’ have fooled themselves into thinking that they are doing Agile development (see Does Agile hide Development Sins?).

Trained Personnel

Management often confuses seniority with competence. After all, if someone has been with the company for 10 years they must be competent, no? The reality is that most people with 10 years of experience only have 1 year repeated 10 times. They are no more skilled than someone with 1 year under their belt.

Learn why, in general, it may be useful to get rid of older developers that are not productive (see No Experience Required!). Also, when it comes to development, you are definitely better off with people that do not rush to write code (see Productive Developers are Smart and Lazy).

Focus on Pre-Test Defect Removal

I’ve written extensively on pre-test defect removal; see Are Debuggers Crutches? for more information.

Conclusion

It is likely that you already know all the ingredients that make for a successful project. You’ve just assumed that you can’t fail even though some of these characteristics are not present.

Quite often projects fail under the leadership of confident people who are incompetent and don’t even know that they are incompetent. If you want to know why intelligent people often do unintelligent things, see Are You are Surrounded by Idiots? Unfortunately, You Might be the Idiot.

There probably are projects out there that fail because of circumstances outside their control (e.g. natural disasters), but in most failed projects you get what you deserve!

Fallacy of the Waterfall Methodology

The waterfall methodology is widely attributed to Winston W. Royce.

The irony is that the paper he published actually concludes that:

In my experience, however, the simpler method (i.e. waterfall) has never worked on large software development efforts and the costs to recover far exceeded those required to finance the five step process listed.

That is, Mr. Royce said that the waterfall process would never work. So much for the geniuses that only read the first 2 pages of the paper and then proceeded to create the “waterfall method” and cost organizations trillions of dollars in failed projects each year.

The waterfall methodology was pushed down our throats by ignorant managers that saw that the waterfall seemed to mimic factory processes. Because this was the process they understood, they incorrectly assumed that this was the right way to develop software.

If any of these guys had bothered to read more than 2 pages from the Royce paper they would have realized that they were making a colossal blunder.

1 Challenged means that the project goes significantly over time or budget. In my estimation, ‘challenged’ simply means politically declaring victory on a project that has really failed.

2 This applies to projects that are 10,000 function points or less. We still have problems with projects that are larger than this, but the vast majority of projects are under this threshold.

3 Quality requirements depend on how reliable the project must be. If the risk is that someone might die because of a software malfunction, then the quality, and therefore the cost, must be much higher than if software failures only constitute an annoyance.

Alistair Cockburn says “A user story is to a use case as a gazelle is to a gazebo”.

User stories come from the Extreme Programming methodology where the assumption was that there will be a high degree of interaction between the developers and the end customer and that QA will largely be done through test driven development. It is important to realize that Extreme Programming does not scale.

method of capturing requirements. You can still use user stories for new modules

So don’t forgo the speed and dynamic nature of user stories, just recognize that there are limits to user stories and that you will need to transition to use cases when your project team or application grows.

We often hear

Recognizing bad customers is usually not difficult.

When sales executives chase every opportunity hoping for a sale, they court transactional buyers, and you get pulled into pricing concessions by demanding customers. The problem is that demanding transactional buyers won’t just ask for the best price; they will also ask for product changes.

Good sales people understand these principles and don’t chase bad customers. But there are not enough good sales people to go around, so virtually every company has a less-than-excellent sales person making trouble for product management and engineering.

If you find yourself in a position where you have acquired one or more bad customers (you know who they are) then your best course of action is to find some way to send them to your competitors. This will increase your profitability and reduce the stress of unreasonable requests flooding into product management and engineering.

Don’t be afraid to let unprofitable and non-strategic customers go. You will feel less stressed and be better off in the long run.

You can see a couple, but only a few people can see the entire forest by just looking at the code. For the rest of us, diagrams are the way to see the forest, and UML is the standard for diagrams.

Contrast that with software where UML diagrams are rarely produced, or if they are produced, they are produced as an afterthought. The irony is that the people pushing to build the architecture quickly say that there is no time to make diagrams, but they are the first people to complain when the architecture sucks. UML is key to planning (see

Yet this is where all the architecture is. Good architecture makes all the difference in medium and large systems. Architecture is the glue that holds the software components in place and defines communication through the structure. If you don’t plan the layers and modules of the system then you will continually be making compromises later on.

Good diagrams, in particular UML, allow you to abstract away all the low level details of an implementation and let you focus on planning the architecture. This higher level planning leads to better architecture and therefore better extensibility and maintainability of software.

These analysis level UML diagrams will help you to identify gaps in the requirements before moving to design. This way you can send your BAs and product managers back to collect missing requirements when you identify missing elements before you get too far down the road.

When executives

One of the most fundamental issues that organizations wrestle with is

Like defect tracking systems most developers have learned that version control is a necessary

Sorry Version One and JIRA, the simple truth is that using an Agile tool does not make you agile, see

I have written extensively about why debuggers are not the best tools to track down defects. So I’ll try a different approach here.

There is definitely a large set of developers that assume that using a

Learning tools is not a

This means that the base rate of success for any software project is only 3 out of 10.

When there is a

Requirements uncertainty is what leads to

Technical uncertainty exists when it is not clear that all requirements can be

Skills uncertainty comes from using resources that are unfamiliar with the requirements or the implementation technology. Skills uncertainty is a

An informal business case is possible only if the requirements, technical, and skills uncertainty is low. This only happens in a few situations:

Here is a list of projects that tend to be accepted without any kind of real business case that quantifies the uncertainties:

What the Heck are Non-Functional Requirements?

This is true whether you use an object-oriented language or not. Non-functional requirements involve everything that surrounds a functional code unit. Non-functional requirements concern things that involve time, memory, access, and location:

Availability is about making sure that a service is available when it is supposed to be available. Availability is about a

Capacity is about delivering enough functionality when required. If you ask a web service to supply 1,000 requests a second when that server is only capable of 100 requests a second then some requests will get dropped. This may look like an availability issue, but it is caused because you can’t handle the capacity requested.

Continuity involves being robust against major interruptions to a service; these include power outages, floods or fires in an operational center, or any other disaster that can disrupt the network or physical machines.

Commonly start-ups are so busy setting up their services that they put non-functional requirements on the

Make no mistake, operations and help desk personnel are fairly resourceful and have learned how to manage software where non-functional requirements are not handled by the code. Hardware and OS solutions exist for making up for poorly written software that assumes single machines or does not take into account the environment that the code is running in, but that can come at a fairly

As development progresses we inevitably run into functionality gaps that are either deemed as

Enhancements may or may not become code changes. Even when enhancements turn into code change requests they will generally not be implemented as the developer or QA think they should be implemented.

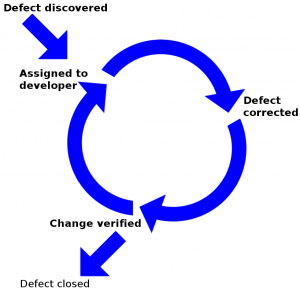

The creation of requirements and test defects in the bug tracker goes a long way to cleaning up the bug tracker. In fact, requirements and test defects represent about 25% of defects in most systems (see