Defects are common, but they are not necessary. They find their way into code because:

- Code pathways are not planned

- Developers are inattentive when coding

- Developers do not understand the requirements

Defects are only corrected by understanding code pathways, and debuggers are not the best way to do this.

Debuggers are commonly used by developers to understand a problem, but being common does not make them the best way to find defects. I’m not advocating a return to “the good old days”, but there was a time when we did not have debuggers and we still managed to debug programs.

Avoid Defects

The absolute best way to remove defects is simply not to create them in the first place. You can be skeptical, but things like the Personal Software Process (PSP) have been used practically to prevent 1 of every 2 defects from getting into your code. Over thousands of projects:

The Personal Software Process increases productivity by 21% and increases code quality by 31%

A study conducted by NIST in 2002 reports that software bugs cost the U.S. economy $59.5 billion annually. This huge waste could be cut in half if all developers focused on not creating defects in the first place.

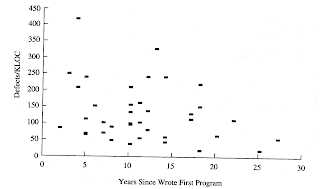

Not only does the PSP focus on code planning, it also makes developers aware of how many defects they actually create. Here are two graphs that show the same group of developers and their defect injection rates before and after PSP training.

[Graphs: defect injection rates before PSP training and after PSP training]

Finding Defects

Using a debugger to understand the source of a defect is definitely one way. But if it is the best way, then why do poor developers spend 25 times more time in the debugger than a good developer? (see No Experience Required!)

That means that poor developers spend more than a week in the debugger (25 × 2 hours = 50 hours) for every 2 hours that a good developer does.

No one is saying that debuggers do not have their uses. However, a debugger is a tool and is only as good as the person using it. A focus on tools obscures a lack of skill (see Agile Tools do NOT make you Agile).

If you are only using a debugger to understand defects, then you will be able to remove a maximum of about 85% of all defects, i.e. roughly 1 in 7 defects will always be present in your code.

Would it surprise you to learn that there are organizations that achieve 97% defect removal? Software inspections take the approach of looking for all defects in code and getting rid of them.
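As a rough illustration of the gap between those two removal rates, the sketch below applies each one to a hypothetical batch of 1,000 injected defects. The batch size and the function name `residual_defects` are assumptions made for the example; only the 85% and 97% figures come from the text.

```python
def residual_defects(injected: int, removal_efficiency: float) -> int:
    """Defects still present after removal, rounded to the nearest whole defect."""
    return round(injected * (1.0 - removal_efficiency))


# Hypothetical number of defects injected during development (an assumption).
injected = 1000

# Removal rates quoted in the text: about 85% with a debugger alone,
# up to 97% for organizations that use software inspections.
debugger_only = residual_defects(injected, 0.85)
with_inspections = residual_defects(injected, 0.97)

print(f"Debugger only:    {debugger_only} defects remain")
print(f"With inspections: {with_inspections} defects remain")
```

Under these assumptions, debugger-only removal leaves five times as many defects in the code as inspection-level removal.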

Learn more about software inspections and why they work here:

Software inspections increase productivity by 21% and increase code quality by 31%

Even better, people trained in software inspections tend to inject fewer defects into code. When you become adept at parsing code for defects then you become much more aware of how defects get into code in the first place.

But interestingly enough, not only will developers inject fewer defects into code and achieve defect removal rates of up to 97%, in addition:

Every hour spent in code inspections reduces formal QA by 4 hours

Conclusion

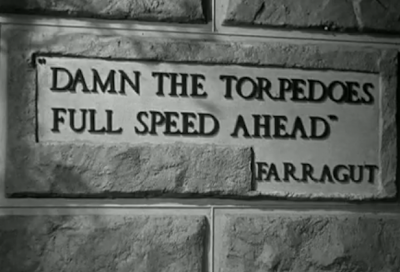

As stated above, there are times where a skilled professional will use a debugger correctly. However, if you are truly interested in being a software professional then:

- You will learn how to plan and think through code before using the keyboard

- You will learn and execute software inspections

- You will learn techniques like PSP which lead to you injecting fewer defects into the code

You are using a debugger as a crutch if it is your primary tool to reduce and remove defects.

Related Articles

Want to see more sacred cows get tipped? Check out:

- Comments are for Losers

- Efficiency is for Losers

- Debuggers are for Losers

- Testing departments are for Losers

Make no mistake, I am the biggest “Loser” of them all. I believe that I have made every mistake in the book at least once 🙂

References

- Gilb, Tom and Graham, Dorothy. Software Inspections

- Jones, Capers. Scoring and Evaluating Software Methods, Practices, and Results. 2008.

- Radice, Ronald A. High Quality

Programmers seem to be fairly productive people. You always see them typing at their desks; they chafe for meetings to finish so that they can go back to their desks and

This means that the base rate of success for any software project is only 3 out of 10.

When there is a

Requirements uncertainty is what leads to

Technical uncertainty exists when it is not clear that all requirements can be

Skills uncertainty comes from using resources that are unfamiliar with the requirements or the implementation technology. Skills uncertainty is a

An informal business case is possible only if the requirements, technical, and skills uncertainty is low. This only happens in a few situations:

Here is a list of projects that tend to be accepted without any kind of real business case that quantifies the uncertainties:

The only possible conclusion is that senior management can’t conceive of their projects failing. They must believe that every software project that they initiate will be successful, that other people fail but that they are in the 3 out of 10 that succeed.

The best way to solve all 3 issues is through formal planning and development. Two methodologies that focus directly on planning at the personal and team level are the

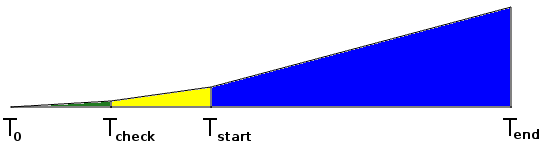

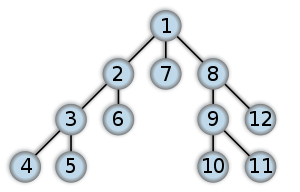

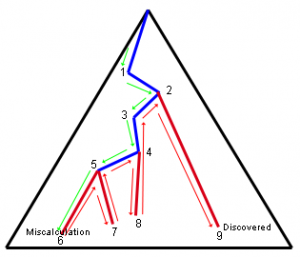

Therefore, complexity in software development is about making sure that all the code pathways are accounted for. In increasingly sophisticated software systems the number of code pathways increases exponentially with the call depth. Using formal methods is the only way to account for all the pathways in a sophisticated program; otherwise the number of defects will multiply exponentially and cause your project to fail.
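The exponential growth can be made concrete with a deliberately simplified model, sketched here in Python: assume every level of the call chain can take one of a fixed number of branches, so the pathways multiply at each level. The function names and the uniform branching factor are assumptions for illustration; real programs branch unevenly.

```python
from itertools import product


def count_paths(branches_per_level: int, call_depth: int) -> int:
    """Number of distinct pathways when each level of the call chain
    can take any one of `branches_per_level` branches (simplified model)."""
    return branches_per_level ** call_depth


def enumerate_paths(branches_per_level: int, call_depth: int) -> list:
    """List every pathway explicitly as a tuple of branch choices,
    one choice per level. Only practical for small inputs."""
    return list(product(range(branches_per_level), repeat=call_depth))


# Show how quickly the count grows with call depth for 3 branches per level.
for depth in (1, 5, 10, 20):
    print(f"call depth {depth:2d}: {count_paths(3, depth):,} pathways")
```

Even in this toy model, enumerating pathways by hand stops being feasible after a handful of levels, which is why the pathways have to be accounted for by planning instead of by stepping through them one at a time.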

Unfortunately, weak IT leadership, internal politics, and embarrassment over poor estimates mean that the deadline will not move, and overbearing senior executives will pressure teams to hit the original deadline even though that is not possible.

Work executed on these activities will not advance your project and should not be counted in the total of completed hours. So if 9,000 hours have been logged on a 10,000 hour project, but 2,000 of those hours were spent on activities that don’t advance the project, then you have really done 7,000 hours of the 10,000 hour project and

Somehow some developers have interpreted this as meaning that there are no formal processes to be followed. But just because you are putting the priority on working software does not mean that there can be no documentation; just because you are responsive to change doesn’t mean that there is no plan.
